The Panasonic Lumix LX3 in 2024

Smartphones have rendered most pocket digital cameras obsolete by now, but there's been a resurgence of popularity among the younger end of Gen Z, at least allegedly. I can certainly see the appeal in a disconnected imaging device that's not constantly dinging with notifications. In that spirit I decided to go through some of the vintage digital cameras I have lying around to see how they hold up, especially with modern RAW processing software like Darktable. This is the first in that series, or at least what I hope becomes a series.

The LX3 was a bit of an atypical bridge camera for 2008 with its f/2.0 lens on the wide end (f/2.8 on the tight end of the zoom). It still had the 1/1.7"-class sensor common in other bridge cameras at the time, but Panasonic was one of the first to step back from the megapixel wars and stuck with only 10MP. It was definitely lower noise for the time, although these days any recent smartphone will have cleaner output with better dynamic range through things like stacking multiple exposures in software.

The main appeal of this camera to me, besides the disconnected nature, is the fact it has physical buttons and a grip that fits better in the hand than a flat smartphone. Having actual optical zoom is nice as well. While I wouldn't run out and buy this camera today, especially if any trends have driven the price above about $20-30 USD, it does OK when put in RAW mode and the files are tweaked in Darktable. For more you can check out my YouTube video here:

Also, here are a few edited photos from the camera. It really does make a decent pocket camera even today. These aren't meant to be the greatest photos ever, just day to day shots.

Fulfilment and Happiness

People often chase happiness in life and social scientists seem to measure individual or collective happiness as some kind of measure of how well a particular demographic, country or other group is doing. Meaning and fulfilment are completely different things from happiness but are often confused for one another. Sometimes the path to fulfilment or self realization can lead to happiness, but often the path there is filled with tribulation and stress.

Judging a fulfilled life is hard to do. Someone can sit and play games or watch TV all day and be perfectly happy doing so, but after some time for most people there's an emptiness or a lack of accomplishment. Hedonism is ultimately empty and often does not lead to positive social reinforcement from one's peers. You can only sharpen a sword against stone and smooth seas do not make for a skilled sailor. Generally individual humans yearn to be useful to the clan, tribe, troupe, church, country or other social group and that requires making a contribution or having something meaningful to bring to the table. That in turn means work or self-improvement.

Happiness is an end state that can result from stimulation or work and reflection. Stimulant happiness is usually gone once the source of entertainment is removed and leaves one longing or chasing that feeling again. Happiness from fulfilment can sit with someone in an empty room.

Do not chase simply feeling good, chase meaning and fulfilment. If it's difficult to do, chances are it's the right direction.

Disable spell check highlighting in Vim for Japanese and Chinese languages

I still use Vim for most of my writing and TeX preparation and over the last few years I've been doing more and more reading and writing in Japanese. Unfortunately the Vim spell check algorithm does not support Asian languages so it will just highlight any and all Japanese characters as spelling mistakes. This makes the document hard to read while editing and is just generally annoying. After digging into the documentation I found that spelllang has an option for handling this:

set spelllang+=cjk

Combining this with what was already in my .vimrc file gave me this:

autocmd BufRead,BufNewFile *.md setlocal spell spelllang+=en_us,cjk

autocmd BufRead,BufNewFile *.txt setlocal spell spelllang+=en_us,cjk

autocmd BufRead,BufNewFile *.tex setlocal spell spelllang+=en_us,cjk

This way when a text, markdown or TeX file is opened with Japanese characters they will be ignored but spell checking for English words will still occur.

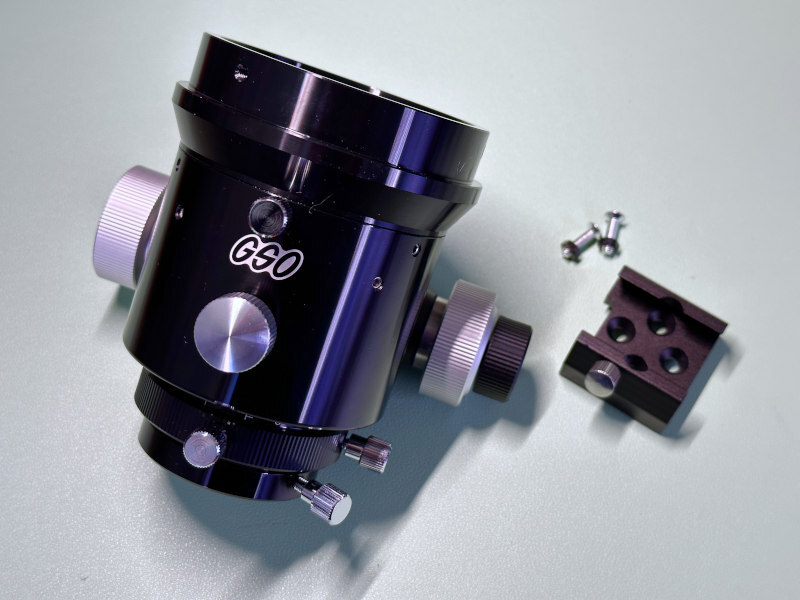

Orion ST80 GSO Focuser Upgrade

Apologies for the quality, I just shot these on my phone.

About twelve years ago when I had started to recover from graduate school burnout my wife bought me an Orion ST80-A as a way to get me back into astronomy. At the time just the optical tube was under $200 and it was quite the bargain for an ultra portable telescope. I had wanted one back in middle school in the 90s when they came out and to this day I still do like a refractor despite their price and impracticality.

Since then I've accumulated a few other telescopes and this one sits idle most of the time. The one exception is solar viewing since I have a white light filter for this diameter scope. Plus I tend to do solar viewing in outreach type scenarios so having the smaller scope is nice for stowing in my office or leaving in the car. It also doesn't need much of a mount and I can usually get away with my larger photo tripod along with a Wimberley style gimbal head I use for larger telephoto lenses.

The most disappointing thing about using this telescope has usually been the focuser. It's a single speed rack and pinion that isn't the smoothest brand new and now with a little over a decade it's only gotten stiffer and less fun to use. Fortunately GSO makes a two speed linear Crayford focuser that is a direct fit replacement for the original. For the ST80 and its many variants (the same telescope was sold under many brands including Orion and Celestron) you'll want the 86mm version of the focuser. These days due to inflation and whatnot sadly these aftermarket focusers have crept up a lot in price, to the point where they almost cost more than the telescope itself. Still for such a quality of life upgrade I think it's worth it.

As a bonus this is also a rotating focuser so you can change the angle of the eyepiece. That's almost worth the cost of admission on its own. The GSO focuser is a bit heavier than the stock version and you'll need to buy a mounting shoe if you want to keep using the stock finder but those are about the only downsides. The stock focuser only accepts 1.25" eyepieces and the GSO will take 2" parts. With this scope I'm not sure that's really needed or wise, but it does make it easier if I ever want to do imaging through it since most of my parts for that are based on the 2" standard. I have done imaging with the ST80 before and being a lower priced achromat designed in the 90s it's what I'd call serviceable at lower magnifications and lower contrast objects.

I honestly think this is a worthwhile upgrade if you've got one version or the other of the ST80 lying around. It improves the usability quite a bit and that's probably 90% of what makes a telescope good in my book. I was looking at swapping out the grease on the old rack and pinion focuser which is a bit of a chore. But with a Crayford working off friction that is no longer an issue either.

Maybe the prices on the aftermarket parts will come down at some point or you can wait for a sale. I was trying to target using this for the eclipse but between cloud cover and a last minute illness I wasn't really able to get out and put it to use. Hopefully once the weather clears I'll be able to use it a little more. Now to look on the used market for hydrogen alpha telescopes people are trying to pawn off after the eclipse!

Darktable vs Camera JPG: why are the colors different?

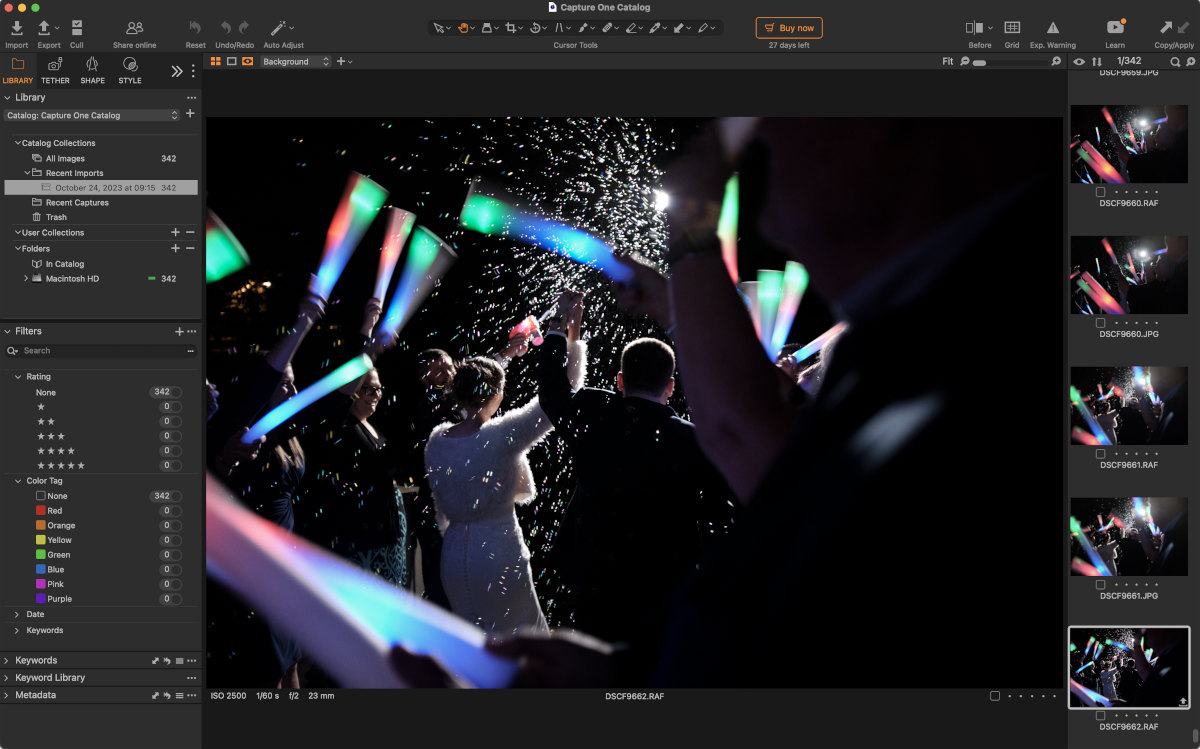

Straight out of camera JPG, Fuji X100F

Color is weird, it only really exists in our heads and there have been many tomes written about how to represent it and how to process it, especially in digital imaging. Something that seems to come up often with RAW photo processors in general and Darktable in particular is why the colors end up looking so different compared to what the camera produces in a JPG.

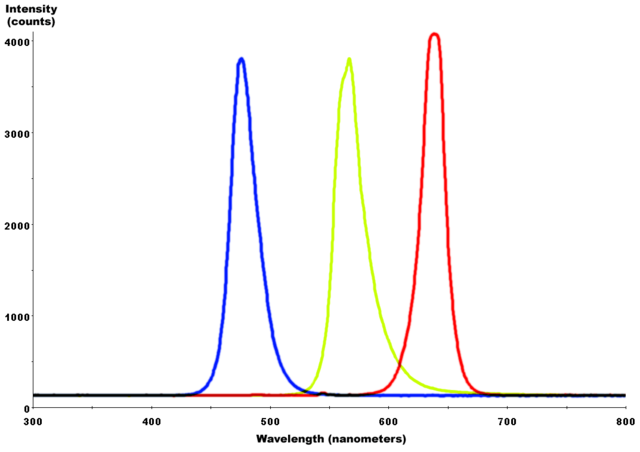

Above is a JPG straight out of my Fuji X100F on the Classic Chrome setting. It looks nice, but there's a problem brewing. Looking at the LED light sticks one notices a very cyan or teal colored center in the blue LED section. These were very cheap LED light sticks and contained one red, one blue and one green LED as far as I could tell. LEDs are generally a very narrow spectrum light source too, individual LEDs only produce a very specific wavelength of the visible spectrum so it's impossible for this single blue LED to produce teal or cyan. White LEDs can be made by mixing several different color LEDs together behind a diffuser or with a UV LED and a fluorescent coating. But the regular low cost LEDs in applications like these are most definitely not those, so where's that cyan coming from?

Typical spectral output of blue, green and red LEDs respectively. Notice how narrow the spectral bandwidth is and how high the peaks are.

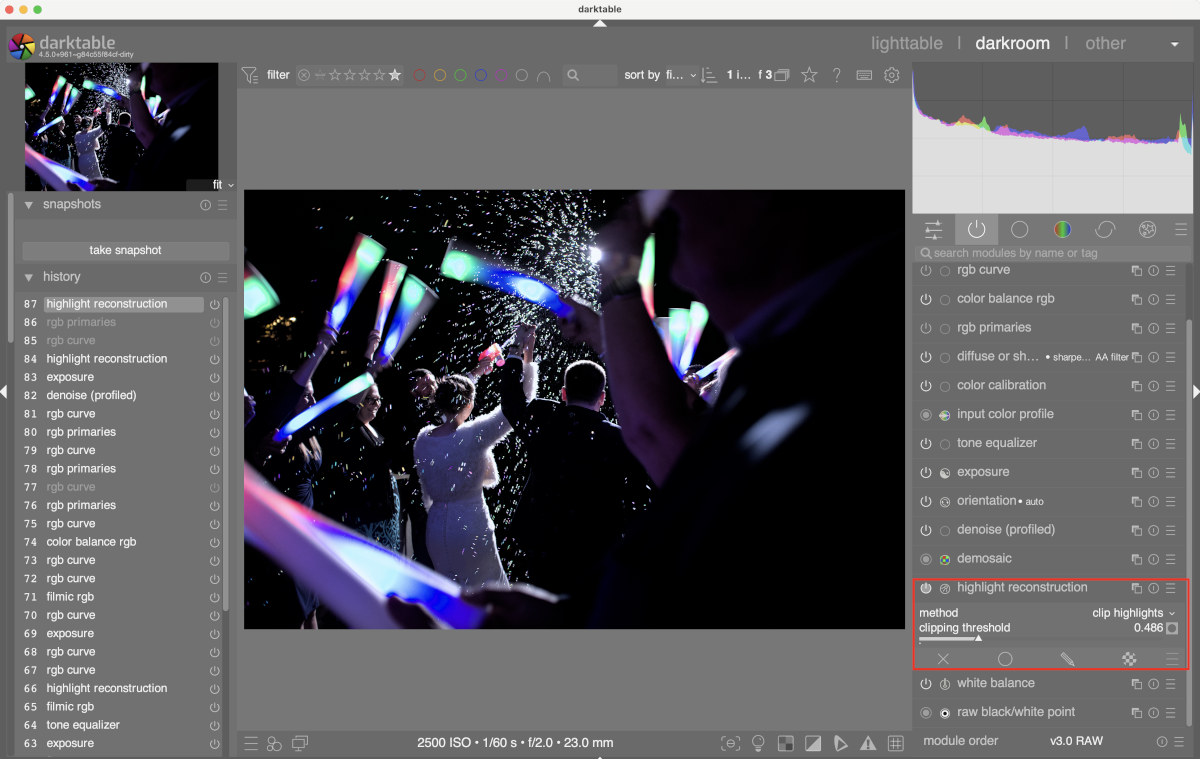

Things only get more confusing once the RAW file is loaded into Darktable.

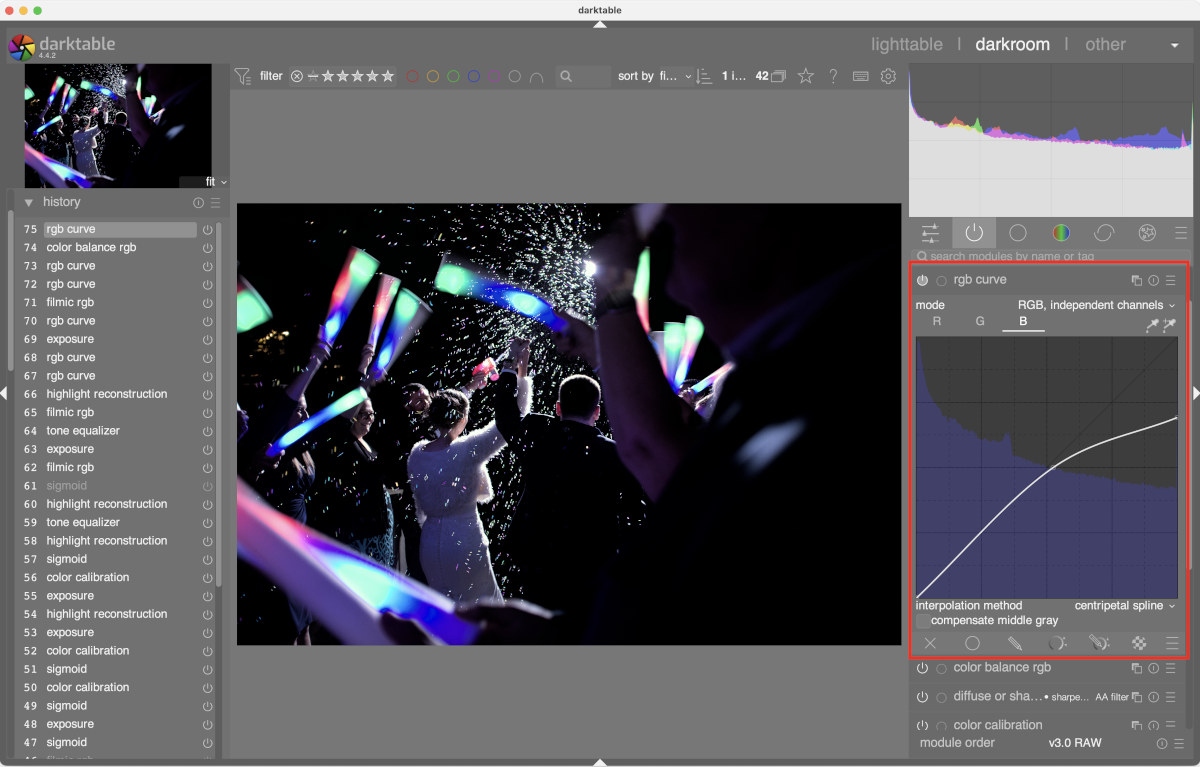

Default Darktable 4.4 processing, Filmic v7

Gone are the teals and cyans but now there's this harsh blue gradient. Frankly it looks like garbage. "Good grief, hoodwinked again, I guess you get what you pay for, it's free so it can't be good" one might think. Except that in this case Darktable is showing you the more technically correct representation.

If one were to think about the color property of shade and draw out dark shades of a blue to a light shade of blue it might look something like below. As the color lightens up it gets progressively closer to white until it falls into pure white. There's not a pixel of cyan or teal in that strip of color below. Those are different hues and cannot fall out of a purely blue light source by just increasing the exposure.

Loading the file into Capture One, Fuji's RAW processor of choice, yields very similar results to the Fuji JPG too.

Yes, I just used the trial version.

Considering Fuji apparently works with Capture One and probably shares information about their curves and JPG processing I would expect it to closely match. I don't have an Adobe subscription so I cannot test that, from what I can tell they don't allow Adobe Camera RAW to be used standalone anymore either.

So, does this mean that the professional software and thousand dollar camera is lying to you? Well, possibly, it depends on how you look at it but Fuji have certainly made some decisions for you in their processing. What they are doing is clipping the blue channel and causing a hue shift or hue rotation.
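To put rough numbers on that hue shift, here's a minimal Python sketch. The starting RGB triplet is a made-up stand-in for a blue LED, not something measured from my RAW file, and clipping at 1.0 is an assumption about a normalized pipeline. It only illustrates the mechanism, not what Fuji's JPG engine actually does internally.

```python
import colorsys

# Hypothetical linear RGB for a "blue" LED as a sensor might record it:
# mostly blue, with a little green and a trace of red leaking in.
BLUE_LED = (0.02, 0.20, 1.00)


def expose(rgb, gain, clip=True):
    """Scale every channel by `gain`, optionally clipping each one at 1.0."""
    scaled = tuple(c * gain for c in rgb)
    if clip:
        scaled = tuple(min(c, 1.0) for c in scaled)
    return scaled


def hue_degrees(rgb):
    """Hue angle in degrees (blue is 240, cyan is 180)."""
    h, _s, _v = colorsys.rgb_to_hsv(*rgb)
    return h * 360.0


if __name__ == "__main__":
    for gain in (1, 2, 4):
        unclipped = hue_degrees(expose(BLUE_LED, gain, clip=False))
        clipped = hue_degrees(expose(BLUE_LED, gain, clip=True))
        print(f"gain {gain}: unclipped hue {unclipped:.0f}, clipped hue {clipped:.0f}")
```

Without clipping the hue stays put however bright the light gets. Once the blue channel hits the ceiling while green keeps climbing, the hue slides away from blue and toward cyan.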

This is all well and good but technically correct and ugly is still ugly. I don't disagree, the Fuji JPG output is more pleasing in my opinion as I find the jarring, harsh blue gradient to be distracting. So let's fix it in Darktable.

First, find the highlight reconstruction module and change the mode from inpaint opposed to clip highlights, then adjust the clipping threshold down until the blue highlights begin to turn cyan. I found a value of about 0.4-0.5 worked well.

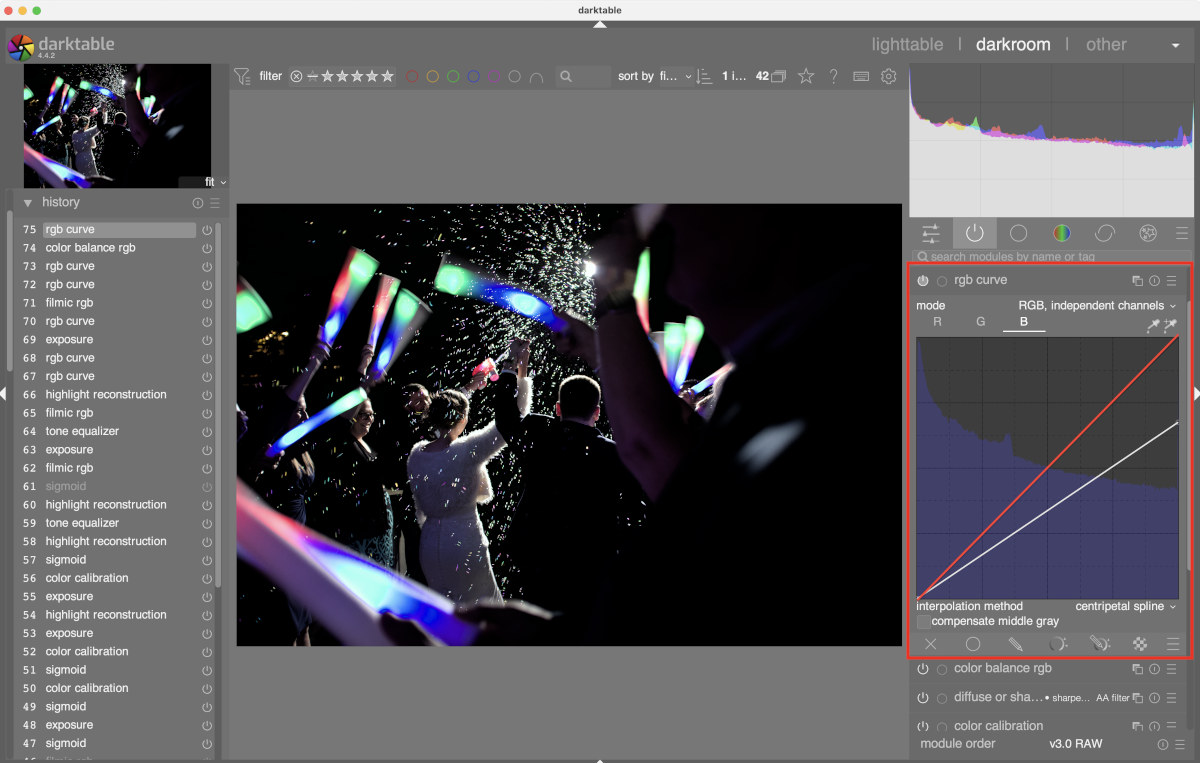

Next, find the rgb curve module and change the mode to RGB, independent channels, click on the blue channel and drag the right end of the curve down the right hand side of the module window. In this screen shot the red line represents the standard linear fit that the module starts out with. Everything between that and the white line that is pulled down has now been clipped from the image. The desired effect is achieved when the cyan or teal starts to intensify.

Then even out the curve by adding a few more points so that the gradients are smoother and less jarring. This is most noticeable in the darker parts of the cyan to blue gradient but it's still very subtle.

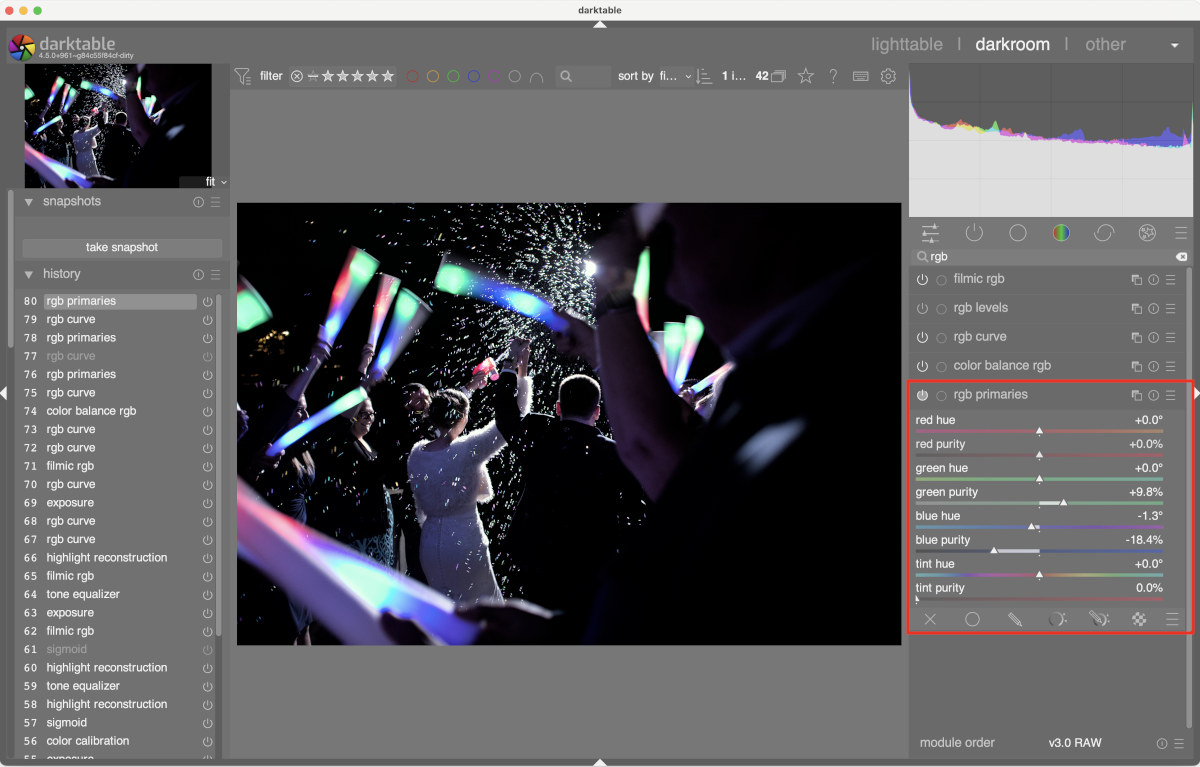

This gets the image about 90% of the way there. As a finishing step, use the rgb primaries module in the new development version of Darktable to "contaminate" the blues with some green. This new module will be available in the full 4.6 release this December. Please note that since GitHub doesn't have ARM Mac runners right now the Mac nightly build is X86_64 only. The full releases have a native ARM version and GitHub is allegedly adding ARM Mac build hosts this fall or winter. I compiled my own from the Darktable source for Apple ARM.

After some other tweaks the image is ready for export and it's close enough to the Fuji's out of camera color treatment.

This begs the question: why not use the Fuji JPG and skip this whole business? That's a good point and if one is happy with the JPG output then that's fine. The problem comes when or if the camera does something that looks bad. Its image processor is applying a standard curve and treatment to a wide array of situations and it might not always nail it. With the RAW it's easier to adjust things in post. These days I usually shoot RAW+JPG on my Fuji gear specifically because their JPG engine is just that good, but there are times when I need the malleability of the RAW. Knowing how the camera and imaging pipeline works is handy in situations like that. Just remember that the camera JPG is not the canonical version of an image and don't get caught up in trying to represent reality exactly. Yes, the bright blue LEDs rendered by default in Darktable are closer to reality but they are ugly as sin. Hue shifting out to a softer more pastel color works well for this image. Also, trying to exactly duplicate the camera JPG is a bit of a fool's errand. It's like trying to reverse engineer a cake. It's not too difficult to get close but without an exact recipe it will never be exact.

2022 Favorite Photos

Despite 2022 being a difficult, if quiet, year for me personally and not really feeling the photography thing a whole lot I did manage to use a camera quite a bit. In no particular order here are a few photos I took that I like for one reason or the other or were noteworthy.

From June, we have synchronous fireflies around here and this was my attempt this summer in my backyard with the D850.

Also from June, some people on a rock face, Fuji X-H1.

This one isn’t very original or special but it got a lot of airtime on local and state news, the November 8th lunar eclipse, D850 this time as well.

Lastly, I have been sliding back into studio and portrait work this year but slowly. I picked up a pair of Godox LC-500Rs and have been using the RGB modes for some shots. I haven’t caught anything really super original this year on this front as it’s been slow and just sort of getting my feet back under me but I like the IRL split toning kind of look in this. Fuji X-H1 again.

Now time for some stats. This year I kept 4645 photos totalling 147GB. At least so far, I tend to do a year end cull in January so that number will likely head down. Specific camera stats below. Note this won't add up to the total due to some of those being from scanned in film, silly ancient floppy disk cameras that don't have modern metadata tags, borrowed cameras and so forth:

| Camera | Number of Photos |
|---|---|
| Nikon D850 | 1496 |
| Nikon D7500 | 573 |
| Nikon D800 | 183 |
| Nikon D1X | 53 |
| Nikon D3s | 37 |
| Fuji X-H1 | 1134 |
| Fuji X-T2 | 609 |
| Fuji X100F | 560 |

All data taken from Darktable. Look, don't judge me. I know I have a problem. It's only hoarding if you don't use it, right?

295 photos had five stars and were edited. I only use one or five star ratings, it's either good or it's not.

My most used focal length was 23mm. I suspect that's because I use the X100F a lot and the Fuji 23mm prime often on the other two.

Age is not a good proxy for technological illiteracy

Now that I'm approaching 40 in a few years the trend of equating age with technological illiteracy has really started to bug me. Most recently I've seen a few articles with respect to Congress, their advanced average age, and relying on that as a crutch to explain away their ineptitude with all things technical. It's a common trope and one that I've bought into in the past but the more experience I get the more I see it for the meme it is. Congress is bad at technology policy because of corruption, lobbies, and willful ignorance. They'd likely be very nearly this bad if the average age was 35 in my opinion.

First, I've met a fair share of Gen Z kids who I'd call incompetent with understanding the tools they use. Honestly, given all the screen time that kids born after the year 2000 have had, I'm a bit surprised by just how bad many of them are with basic concepts like a directory tree or just understanding what different pieces of hardware do and how they interact. Granted some of this is "standing on the shoulders of giants" in that using technology today is just a lot easier than it was 30 years ago when I was getting started. I had to know Hayes modem codes, IRQs, and TSRs. They press a button on their router for WPS or have to enter a WPA2 password in a worst case scenario. However the number of "tech geeks" in the younger generation seems rather proportional to what it was in my day despite the greater exposure to technology Gen Z has had. There are plenty of experts in Gen Z, it's just not everyone under 25 years old.

Working in a university gives one exposure to a good mix of age groups as well. I know plenty of people 45 and older who know more than I do. In fact I've known a few rather tech savvy retirees who kept up with things nicely just as a hobby. There's definitely a slow down effect as one ages, I can tell you I don't pick up new languages as fast as I used to, but experience is nothing to be ignored either. Ageism is pretty rampant in the technology field overall but that's a different rant.

Technology as a subject is rather broad and deep as well. My area of expertise is generally more low level physical things. Hardware, networking, ASM, C, some Python and virtualization. For example I understand how a state machine and an ALU work but give me some higher level abstract software engineering topic and I'm not really going to follow. Likewise I've met a few brilliant machine learning experts that didn't know the difference between RAM, cache and physical storage. I don't think either of us should be called technologically illiterate, just somewhat specialized. Likewise I'm not an avid smartphone user (my first gen iPhone SE still works just fine thank you) so outside of cutting off most of the garbage I don't use on it I'm really not up on that side of the world. My battery literally lasts days as that's how little I use it. However just because a younger person can run circles around me there doesn't mean I'm now ready to be put out to pasture or somehow technologically illiterate. Honestly, there I've just drawn a line as to where I think that tool's trade-offs are not worth integrating it in my life more.

I also find large chunks of the younger generation either largely resigned to or ill-informed on the endangered species that is privacy these days and frankly that seems to be a problem at both extremes of the age spectrum. A lot of the 60+ crowd does not understand it either. I think Gen X and older Millennials are probably the most aware of what big tech and government are interested in and why it's a problem. Also, just how insidious and inside your mind social media can be. Just because a Google or Instagram is flying the flag of your pet cause does not mean they're your friend. They just want you to hand over more of that 21st century version of crude oil, data, and will tell you whatever you want to hear to get it. I don't know why, maybe it was the inherent cynicism 80s and early 90s kids grew up in but that chunk seems to have a healthy skepticism of living online overall. The point to drive home here is that you're not using these services, they're using you.

This isn't meant to be a "kids these days" rant. I also acknowledge that specific skills are different across generations. Gen Z grew up with things I did not. I just think equating younger age with greater understanding of the tools they are using is a bad move. Look at someone's credentials not their birth year before you judge them. Age is a bad proxy for "knows what they're talking about" and some of us geezers may surprise you. Likewise I know plenty of 20-somethings I respect and would call my peers. Age has little to do with it and we shouldn't overly preference one group over the other based on faulty assumptions. Ignorance knows no generational bounds and neither does curiosity.

Recent Sunrises

A few recent sunrise photos, edits in Darktable 3.6.

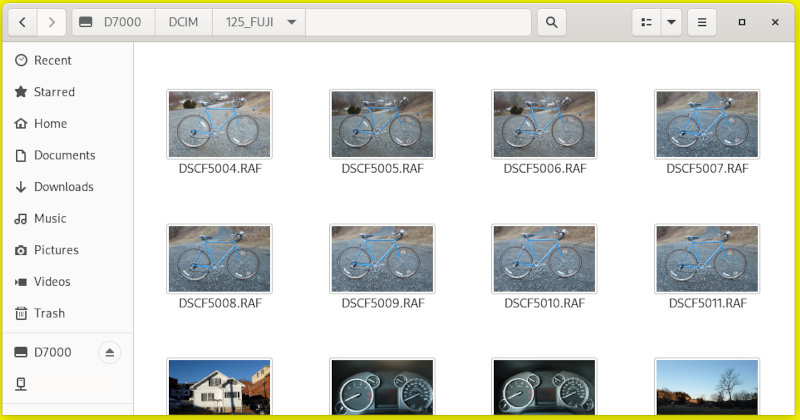

Fedora GNOME RAW Thumbnails

I've been trying to give GNOME on my desktop a fair shake the last few months as I like the way it works on my XPS 13. I've run into a few problems here and there but lacking RAW image previews in the file manager is quite the oversight in 2021. Dolphin and even Thunar do it by default in KDE Plasma and XFCE respectively. I usually run Fedora with the KDE Plasma environment on my desktop machine but the last time I tried GNOME 3 a number of years ago I seem to recall RAW thumbnails existing? Perhaps not, but here's how you fix that:

Fixing this oversight is not too terrible but does require some fiddling. First you'll need the ufraw package. Install that through the GUI Software center or through dnf:

dnf install ufraw

It'll bring a couple of dependencies with it but not too much bloat.

Next you'll need to create a file at /usr/share/thumbnailers/ufraw.thumbnailer and add the following to it:

[Thumbnailer Entry]

Exec=/usr/bin/ufraw-batch --embedded-image --out-type=png --size=%s %u --overwrite --silent --output=%o

MimeType=image/x-3fr;image/x-adobe-dng;image/x-arw;image/x-bay;image/x-canon-cr2;image/x-canon-crw;image/x-cap;image/x-cr2;image/x-crw;image/x-dcr;image/x-dcraw;image/x-dcs;image/x-dng;image/x-drf;image/x-eip;image/x-erf;image/x-fff;image/x-fuji-raf;image/x-iiq;image/x-k25;image/x-kdc;image/x-mef;image/x-minolta-mrw;image/x-mos;image/x-mrw;image/x-nef;image/x-nikon-nef;image/x-nrw;image/x-olympus-orf;image/x-orf;image/x-panasonic-raw;image/x-pef;image/x-pentax-pef;image/x-ptx;image/x-pxn;image/x-r3d;image/x-raf;image/x-raw;image/x-rw2;image/x-rwl;image/x-rwz;image/x-sigma-x3f;image/x-sony-arw;image/x-sony-sr2;image/x-sony-srf;image/x-sr2;image/x-srf;image/x-x3f;image/x-panasonic-raw2;image/x-nikon-nrw;

Close out of Nautilus and do an rm -rf ~/.cache/thumbnails/* then open a directory with RAW images. You should now see RAW photos as above. Hooray!
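If you'd rather generate that file than paste the giant MimeType line by hand, here's a rough Python sketch. The helper name `render_thumbnailer` and the shortened MIME list are mine, purely for illustration; the Exec line is the same one as above. It only builds the text, so you'd still need to write the result to the thumbnailers directory yourself as root.

```python
# Same Exec line as in the entry above.
UFRAW_EXEC = (
    "/usr/bin/ufraw-batch --embedded-image --out-type=png "
    "--size=%s %u --overwrite --silent --output=%o"
)

# Shortened for illustration; the full list is in the entry above.
RAW_MIME_TYPES = [
    "image/x-adobe-dng",
    "image/x-canon-cr2",
    "image/x-fuji-raf",
    "image/x-nikon-nef",
    "image/x-panasonic-raw",
    "image/x-sony-arw",
]


def render_thumbnailer(exec_line, mime_types):
    """Return the text of a thumbnailer entry (hypothetical helper)."""
    # Drop duplicate MIME types while keeping the original order.
    unique = list(dict.fromkeys(mime_types))
    # Each MIME type is terminated by a semicolon, matching the entry above.
    mime_field = "".join(f"{m};" for m in unique)
    return (
        "[Thumbnailer Entry]\n"
        f"Exec={exec_line}\n"
        f"MimeType={mime_field}\n"
    )


if __name__ == "__main__":
    print(render_thumbnailer(UFRAW_EXEC, RAW_MIME_TYPES), end="")
```

Keeping the MIME types in a list makes it easier to add a format later without hunting through one very long line.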

Granted I think Nautilus is one of GNOME's weak points, especially compared to what's available in other Linux desktop environments. Nautilus seems neglected and at times it even makes me miss the notoriously bare-bones Mac OS Finder. GNOME excels in a number of areas, which makes the mediocrity of the file manager stick out. Split windows like Dolphin and more view mode options instead of just "icons" and "list" would be a good start.

RAW thumbnailing really should be in the base install of any desktop distribution using GNOME. It's in literally everything else (except Windows 10 apparently) and has been for years. If you're running GNOME you're probably looking for more of a modern desktop experience instead of a lean and slim install anyway so a few more megabytes of software and a configuration file won't make that much more difference.

Peak Linux Desktop

We are in the peak Linux desktop era and it might be downhill for a while. When I say peak Linux desktop era I mean you can pick up nearly any machine off the shelf, install Fedora, Ubuntu, Mint, Debian or Manjaro as they are and get straight to work without fiddling with the computer itself. There are some corner cases where things are more difficult but even nVidia PRIME works well these days. In my opinion the Linux desktop is basically at parity with the proprietary options in terms of "just works." You can boot the machine up, do the initial create your account and sign into your cloud stuff and boom you're done just like any other desktop OS. The image of Linux being about messing with the computer is really outdated. It's a good productivity tool, as much so as Windows or macOS in my opinion. Yes it's different and takes some adjustment to the new workflow, just as if one were to switch from Windows to Mac or vice-versa, but it's not inherently broken. No you don't have to touch the CLI for anything on the major desktop environments if you don't want to.

A lot of this is due to monumental efforts on the part of volunteers but also companies like Red Hat and Canonical. However there are two major players who have contributed huge amounts of code, time and funding to the Linux ecosystem that people seem to forget about: AMD and Intel. Intel is a major part of the reason why modern USB standards work on Linux and AMD has documented and open sourced their graphics drivers. Intel and AMD pay developers to work on this, upstream code into the kernel and have generally been decent community members. Even with all their flaws we owe them some thanks for Linux hardware support being where it is along with the fleet of volunteers maintaining things at organizations like freedesktop.org.

Late last year Apple released ARM based Macs. Microsoft has just announced a translation layer to allow X64 code to run on ARM on Windows and has shipped ARM machines for years. Many OEMs have been shipping ARM Chromebooks for a while too. The Apple announcement came with their usual showmanship and is going to make the rest of the world take notice. Like everything else Apple does the rest of the industry will be tripping over themselves to follow suit. I wouldn't want to be Intel or AMD right now or be holding their stock. ARM is likely the future for most devices outside of enthusiast desktops or legacy applications. I think that even enthusiast desktop platforms will switch over, but that's just my opinion. Yes I realize Chromebooks are technically Linux as is Android. This is more about the FreeDesktop type of future. Chromebooks and smart phones are still very locked down devices and do not support the full range of what the Linux desktop today can.

This brings us back to peak Linux desktop. Linux runs on armhf and arm64 just fine. The problem is the ARM ecosystem is a mess of cobbled together proprietary things. Even if you aren't dealing with a locked bootloader, power management, boot processes, IO and graphics vary tremendously from one ARM platform to the other, unlike X86 and X64 where UEFI, ACPI and other well documented standards are implemented across nearly the entire range. There are very few ARM vendors working on open sourcing and upstreaming support for their platforms into the Linux kernel either. Even the beloved Raspberry Pi relies on out-of-tree patches for full support. Most of the other consumer facing ARM boards out there for Linux rely on reverse engineering in some part or the other, even if just for the Mali graphics.

There is hope, a standard called ARM ServerReady mandates UEFI and ACPI for compliance. This solves the boot process and power management problem. Despite the data center centric name this standard works fine on desktop and laptops as well. Microsoft's Surface products used ARM ServerReady as does the ARM based Lenovo Yoga. Tyan and Gigabyte have been selling a range of ARM servers that fully support it as well. Indeed you can grab an arm64 Debian installer and slap it right on the Tyan and Gigabyte machines. They work great as long as you don't need graphics.

Alas, this only solves part of the problem though as you're still dealing with a lack of driver support. Mali graphics have a reverse engineered driver that has been mostly accepted into the kernel the last time I checked but like most reverse engineered things it's usable but not fully featured. A very similar feeling to the Nouveau driver for nVidia cards. Most ARM options for Linux on the desktop or mobile right now are low performance patchwork things like this.

What we need is an ARM vendor to step up like Intel and AMD have and work hard on upstreaming support into the Linux kernel for all their hardware and ServerReady to become the default ARM platform. Huawei is probably the closest on this as the Chinese tech sector is ditching western software firms in favor of Deepin and Ubuntu Linux. I think a lot of the FUD about Huawei hardware is just US government sabre rattling. I've seen no proof of it and honestly these days it's pick your backdoor if you're using anything from a Five Eyes country anyway. Until someone starts working hard on upstreaming support and ServerReady becomes the de facto standard, Linux on ARM is going to be a mess and will revert back to hobbyist only territory for the desktop. I've been using Linux on and off since the late '90s and early '00s. I remember the good-old-bad-old days before Intel and AMD got onboard and I don't want to go back. That's where the "4 hours and 6 kernel recompiles to get your network card to connect" meme came from. I've got other things to get done now and cannot spend that kind of time minding my machine.

Don't get me wrong, I don't think the Linux desktop is going anywhere. But I think the mid-future is more like the Raspberry Pi or PineBook Pro and less like a ThinkPad or XPS with Fedora, Mint or Ubuntu on it. It will be a tool for people to create one off projects on, not the robust desktop we have today. There may be an open, performant, upstreamed and widely available ARM platform for Linux in the future but I think in the meantime we're in for a decade of pain. Again, I could be wrong, that happened once before in the 80s.